Title: Elements of Laboratory Technology Management

Author for citation: Joe Liscouski, with editorial modifications by Shawn Douglas

License for content: Creative Commons Attribution 4.0 International

Publication date: Originally published 2014; republished February 2021

NOTE: This was originally published on LIMSwiki.org and is reproduced here using the same license.

Introduction

This discussion is less about specific technologies than it is about the ability to use advanced laboratory technologies effectively. When we say “effectively,” we mean that those products and technologies should be used successfully to address needs in your lab, and that they improve the lab’s ability to function. If they don't do that, you’ve wasted your money. Additionally, if the technology in question hasn’t been deployed according to a deliberate plan, your funded projects may not achieve everything they could. Optimally, when applied thoughtfully, the available technologies should result in the transformation of lab work from a labor-intensive effort to one that is intellectually intensive, making the most effective use of people and resources.

People come to the subject of laboratory automation from widely differing perspectives. To some it’s about robotics, to others it’s about laboratory informatics, and still others view it as simply data acquisition and analysis. It all depends on what your interests are and, more importantly, what your immediate needs are.

People began working in this field in the 1940s and 1950s, with the work focused on analog electronics to improve instrumentation; this was the first phase of lab automation. Most notable was the development of scanning spectrophotometers and process chromatographs. Those who first encountered this equipment didn’t think much of it; to them, it was simply the way the world had always been. Others, who had to deal with products like the Spectronic 20[lower-alpha 1] (a single-beam manual spectrophotometer) and use it to develop visible spectra one wavelength measurement at a time, appreciated the automation of scanning instruments.

Mercury switches and timers triggered by cams on a rotating shaft provided chromatographs with the ability to automatically take samples, actuate back flush valves, and take care of other functions without operator intervention. This left the analyst with the task of measuring peaks, developing calibration curves, and performing calculations, at least until data systems became available.
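To make the analyst's remaining task concrete, the sketch below fits a straight-line calibration curve to a set of standards by ordinary least squares and then inverts it to estimate an unknown's concentration from its peak area. It is a minimal illustration assuming a linear detector response; the function names `fit_calibration` and `quantify` and the standard values are hypothetical.

```python
from statistics import fmean

def fit_calibration(concentrations, responses):
    """Fit response = slope * concentration + intercept by ordinary least squares."""
    if len(concentrations) != len(responses) or len(concentrations) < 2:
        raise ValueError("need at least two paired standards")
    x_bar = fmean(concentrations)
    y_bar = fmean(responses)
    sxx = sum((x - x_bar) ** 2 for x in concentrations)
    if sxx == 0:
        raise ValueError("standards must span more than one concentration")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(concentrations, responses))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

def quantify(response, slope, intercept):
    """Invert the calibration line to estimate concentration from a measured response."""
    return (response - intercept) / slope

# Hypothetical standards: concentration (mg/L) vs. peak area (arbitrary units)
standards = [1.0, 2.0, 4.0, 8.0]
areas = [105.0, 205.0, 405.0, 805.0]
slope, intercept = fit_calibration(standards, areas)
print(slope, intercept)                      # 100.0 5.0
print(quantify(305.0, slope, intercept))     # 3.0
```

This is exactly the kind of repetitive calculation that early data systems and integrators took off the analyst's hands.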

The direction of laboratory automation changed significantly when computer chips became available. In the 1960s, companies such as PerkinElmer were experimenting with the use of computer systems for data acquisition as precursors to commercial products. The availability of general-purpose computers such as the PDP-8 and PDP-12 series (along with the Lab 8e) from Digital Equipment, with other models available from other vendors, made it possible for researchers to connect their instruments to computers and carry out experiments. The development of microprocessors from Intel (4004, 8008) led to the evolution of “intelligent” laboratory equipment ranging from processor-controlled stirring hot-plates to chromatographic integrators.

As researchers learned to use these systems, their application rapidly progressed from data acquisition to interactive control of the experiments, including data storage, analysis, and reporting. Today, the product set available for laboratory applications includes data acquisition systems, laboratory information management systems (LIMS), electronic laboratory notebooks (ELNs), laboratory robotics, and specialized components to help researchers, scientists, and technicians apply modern technologies to their work.

While there is a lot of technology available, the question remains "how do you go about using it?" Not only do we need to know how to use it, but we also must do so while avoiding our own biases about how computer systems operate. Our familiarity with using computer systems in our daily lives may cause us to assume they are doing what we need them to do, without questioning how it actually gets done. “The vendor knows what they are doing” is a poor reason for not testing and evaluating control parameters to ensure they are suitable and appropriate for your work.

Moving from lab functions and requirements to practical solutions

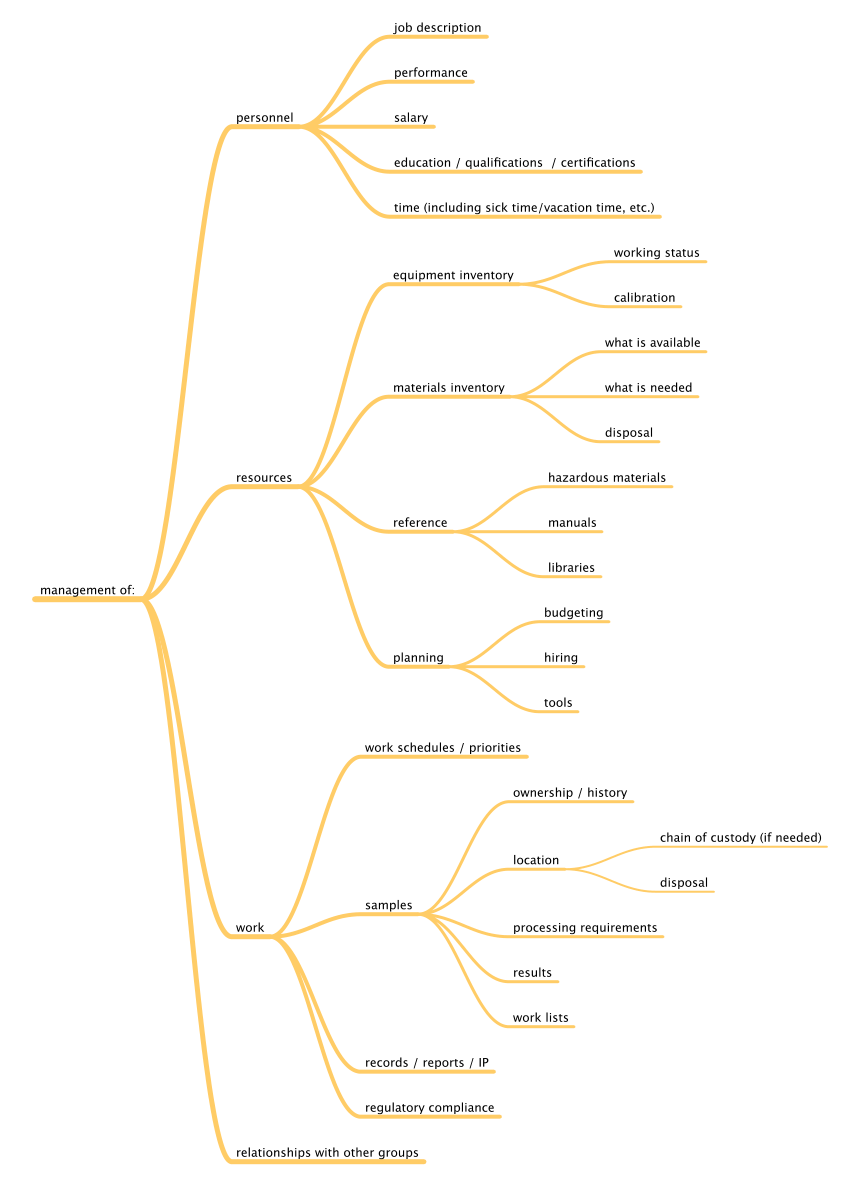

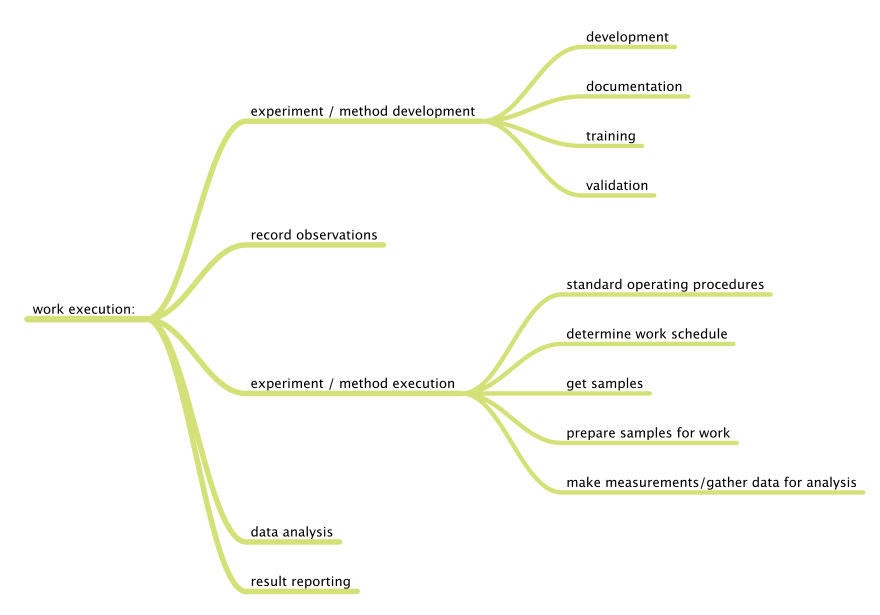

Before we can begin to understand the application of the tools and technologies that are available, we have to know what we want to accomplish, specifically what problems we want to solve. We can divide laboratory functions into two broad classes: management and work execution. Figure 1 addresses management functions, whereas Figure 2 addresses work execution functions, all common to laboratories. You can add to them based on your own experience.

Vendors have been developing products to address these work areas, and there are a lot of products available. Many of them are "point" solutions: products that are focused on one aspect of work without an effort to integrate them with others. That isn’t surprising since there isn’t an architectural basis for integration aside from specific hardware systems (e.g., FireWire, USB) or vendor-specific software systems (e.g., office product suites). Another issue in scientific work is that the vendor may only be interested in solving a particular problem, with most of the emphasis on an instrument or technique. They may provide the software needed to support their hardware, with data transfer and integration left to the user.

As you work through this document, you’ll find a map of management responsibilities and technologies. How do you connect the above map of functions to the technologies? Applying software and hardware solutions to your lab's needs requires deliberate planning. The days of purchasing point solutions to problems have passed. Today's lab managers need to think more broadly about product usage and how components of lab software systems work together. The point of this document is to help you understand what you need to think about in that regard.

Given those summaries of lab activities, how do we apply available technologies to improve lab operations? Most of the answers fall under the heading of "laboratory automation," so we’ll begin by looking at what that is.

What is laboratory automation?

This isn’t a trivial question; your answer may depend on the field you are working in, your experience, and your current interests. To some it means robotics, to others it is a LIMS (or its clinical counterpart, the laboratory information system or LIS). The ELN and instrument data systems (IDS) are additional elements worth noting. These are examples of product classes and technologies used in lab automation, but they don’t define the field. Wikipedia provides the following as a definition[1]:

Laboratory automation is a multi-disciplinary strategy to research, develop, optimize and capitalize on technologies in the laboratory that enable new and improved processes. Laboratory automation professionals are academic, commercial and government researchers, scientists and engineers who conduct research and develop new technologies to increase productivity, elevate experimental data quality, reduce lab process cycle times, or enable experimentation that otherwise would be impossible. The most widely known application of laboratory automation technology is laboratory robotics. More generally, the field of laboratory automation comprises many different automated laboratory instruments, devices, software algorithms, and methodologies used to enable, expedite and increase the efficiency and effectiveness of scientific research in laboratories.

And McDowall offered this definition in 1993[2]:

Apparatus, instrumentation, communications or computer applications designed to mechanize or automate the whole or specific portions of the analytical process in order for a laboratory to provide timely and quality information to an organization

These definitions emphasize equipment and products, and that is where typical approaches to lab automation and the work we are doing part company. Products and technologies are important, but what is more important is figuring out how to use them effectively. The lack of consistent success in the application of lab automation technologies appears to stem from this focus on technologies and equipment—“what will this product do for my lab?”—rather than methodologies for determining what is needed and how to implement solutions.

Having a useful definition of laboratory automation is crucial since how we approach the work depends on how we see the field developing. The definition the Institute for Laboratory Automation (ILA) bases its work on is this:

Laboratory automation is the process of determining needs and requirements, planning projects and programs, evaluating products and technologies, and developing and implementing projects according to a set of methodologies that results in successful systems that increase productivity, improve the effectiveness and efficiency of laboratory operations, reduce operating costs, and provide higher-quality data.

The field includes the use of data acquisition, analysis, robotics, sample preparation, laboratory informatics, information technology, computer science, and a wide range of technologies and products from widely varying disciplines, used in the implementation of projects.

Why "process" is important

Lab automation isn’t about stuff, but how we use stuff. The “process” component of the ILA definition is central to what we do. To quote Frank Zenie[lower-alpha 2] (one of the founders of Zymark Corporation), “you don’t automate a thing, you automate a process.” You don’t automate an instrument; you automate the process of using one. Autosamplers are a good example of successful automation: they address the process of selecting a sample vial, withdrawing fluid, positioning a needle into an injection port, injecting the sample, preparing the syringe for the next injection, and indexing the sample vials when needed.
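Zenie's point can be illustrated by writing the autosampler's job down as a process rather than as a device. In the sketch below, the step names come from the description above, while the `Autosampler` class and its logging behavior are hypothetical; a real autosampler would drive hardware at each step rather than merely recording it.

```python
from dataclasses import dataclass, field

# The injection cycle described in the text, expressed as an ordered process.
INJECTION_STEPS = (
    "select sample vial",
    "withdraw fluid",
    "position needle in injection port",
    "inject sample",
    "prepare syringe for next injection",
)

@dataclass
class Autosampler:
    tray: list                                  # vial identifiers in tray order
    position: int = 0                           # index of the vial currently selected
    log: list = field(default_factory=list)     # (vial, step) pairs in execution order

    def run_cycle(self):
        vial = self.tray[self.position]
        for step in INJECTION_STEPS:
            # A real device would actuate hardware here; this sketch only records the step.
            self.log.append((vial, step))
        self.position += 1                      # index the tray to the next vial

    def run_all(self):
        while self.position < len(self.tray):
            self.run_cycle()
        return self.log

sampler = Autosampler(tray=["S1", "S2"])
log = sampler.run_all()
print(len(log))   # 10: five steps for each of two vials
```

Describing the work as an explicit sequence is what makes it possible to automate; the instrument itself is only one participant in that sequence.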

A number of people have studied the structure of science and the relationship between disciplines. Lab automation is less about science and more about how the work of science is done. Before lab automation can be considered for a project, the underlying science has to be done. In other words, the process that automation is going to be applied to must exist first. It also has to be the right process for consideration. This is a point that needs attention.

If you are going to spend resources on a project, you have to make sure you have a well-characterized process and that the process is both optimal and suitable for automation. This means:

- The process is well documented, people who use the process have been trained on that documented process, and their work is monitored to identify any shortcuts, workarounds, or other variations from that documented process. Differences between the published process and the one actually in use can have a significant impact on the success of a particular project design.

- The process’s “readiness for automation” has been determined. The equipment used is suitable for automation or the changes needed to make it suitable are known, and it can be done at reasonable cost. Any impact on warranties has been determined and found to be acceptable.

- If several process options exist (e.g., different test protocols for the same test question), they are evaluated for their ability to meet the needs of the science and to be successfully implemented. Other options, such as outsourcing, should be considered to make the best use of resources; it may be more cost-effective to outsource than automate.

When looking at laboratory processes, it's useful to recognize they may operate on different levels. There may be high-level processes that address the lab's reason for existence and cover the mechanics of how the lab functions, as well as low-level processes that address individual functions of the lab. This process view is important when we consider products and technologies, since products have to fit the process, and the process is the general basis for product requirements. Discussions about LIMS and ELNs are one example, with questions often asked about whether one or both are required in the lab, or whether an ELN can replace a LIMS.

These and other similar questions reflect both vendor influence and a lack of understanding of the technologies and their application. Some differentiate the two types of technology based on “structured” (as found in LIMS) vs. “unstructured” (as found in ELNs) data. Broadly speaking, LIMS come with a well-defined, extensible database structure, while ELNs are viewed as unstructured since you can put almost anything into an ELN and organize the contents as you see fit. But this characterization doesn’t hold up well, since as a user I might consider the contents, along with an index, as having a structure. It is also more of an information technology approach than one that addresses laboratory needs. In the end, an understanding of lab processes is still required to resolve most issues.

LIMS are well-defined entities[lower-alpha 3] and the only one of the two to carry an objective industry-standard description. LIMS are also designed to manage the processes surrounding laboratory testing in a wide variety of industries (e.g., from analytical, physical, and environmental testing, to clinical testing, which is usually associated with the LIS). The lab behavior process model is essentially the same across industries and disciplines. Basically, if you are running an analytical lab and need to manage samples and test results while answering questions about the status of testing on a larger scale than what you can memorize[lower-alpha 4], a LIMS is a good answer.
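As a rough illustration of the core LIMS process (log samples, schedule tests, record results, and answer status questions), the sketch below tracks samples in memory. It is a deliberately minimal, hypothetical model: the `MiniLims` class, the status names, and the sample data are assumptions, and a real LIMS adds a database, audit trails, user management, and much more.

```python
from collections import Counter
from dataclasses import dataclass, field

@dataclass
class Sample:
    sample_id: str
    tests: dict = field(default_factory=dict)   # test name -> result (None = pending)

    def status(self):
        results = list(self.tests.values())
        if results and all(r is not None for r in results):
            return "complete"
        if any(r is not None for r in results):
            return "in progress"
        return "logged"

class MiniLims:
    """Hypothetical in-memory stand-in for LIMS sample and result management."""

    def __init__(self):
        self.samples = {}

    def log_sample(self, sample_id, tests):
        # Logging a sample schedules each requested test with no result yet.
        self.samples[sample_id] = Sample(sample_id, {t: None for t in tests})

    def record_result(self, sample_id, test, value):
        sample = self.samples[sample_id]
        if test not in sample.tests:
            raise KeyError(f"{test} is not scheduled for {sample_id}")
        sample.tests[test] = value

    def status_summary(self):
        # Answers "where does testing stand?" across all samples at once.
        return Counter(s.status() for s in self.samples.values())

lims = MiniLims()
lims.log_sample("S-001", ["pH", "moisture"])
lims.log_sample("S-002", ["pH"])
lims.log_sample("S-003", ["pH", "moisture"])
lims.record_result("S-001", "pH", 7.1)
lims.record_result("S-002", "pH", 6.8)
print(lims.status_summary())   # one sample each: in progress, complete, logged
```

The value lies less in any single record than in being able to answer status questions across more samples than anyone could keep track of from memory.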

At the time of this writing, there is no standardized definition of an ELN, though a forthcoming update of ASTM E1578 intends to rectify that. However, given the current hype about ELNs, any product with that designation is going to get noticed. Let’s avoid the term and replace it with a set of software types that addresses similar functionality:

1. Scripted execution systems – These are software systems that guide an analyst in the conduct of a procedure (process); examples include Velquest (now owned by Accelrys) products and the scripting notebook function (vendor's description) in LabWare LIMS.

2. Journal or diary systems – These are software systems that provide a record of laboratory work; a word processing system might fill this need, although there are products with features specifically designed to assist lab work.

3. Application- or discipline-specific record keeping systems – These are software systems designed for biology, chemistry, mathematics and other areas that contain features that allow you to record data and text in a variety of forms that are geared toward the needs of specific areas of science.

This is not an exhaustive list of forms or functionality, but it is sufficient to make a point. The first, scripted execution, is designed around a process or, more specifically, designed to give the user a mechanism to describe the sequential steps in a process so that they can be repeated under strict controls. These do not replace a LIMS but can be used synergistically with one, or with software that duplicates LIMS capabilities (some have suggested enterprise resource planning or ERP systems as a substitute). The other two types are repositories of lab information: equations, data, details of procedures, etc. There is no general underlying process as there is with LIMS. They can provide a researcher with a means of describing experiments, collecting data, and performing analyses, which you can correctly view as processes, but they are unique to that researcher or lab and not based on any generalized industry model, as we see in testing labs.

Why is this important? These descriptions illustrate something fundamental: process dictates needs, and needs set requirements for products and technology. The “do we need a LIMS or ELN” question is meaningless without an understanding of the processes that operate in your laboratory.
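The scripted execution type described above, a fixed sequence of steps repeated under strict controls, can be sketched in a few lines. This is a hypothetical illustration, not how any particular product works: each `Step` pairs an instruction with an acceptance check, and `execute_script` enforces the order, records every entry, and halts at the first value that fails its check.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Step:
    instruction: str
    check: Callable[[float], bool]   # acceptance criterion for the analyst's entry

def execute_script(steps, entries):
    """Walk the steps in order, pairing each with the analyst's entry.

    Returns (record, completed). Execution halts at the first entry that
    fails its check; missing entries also leave the script incomplete.
    """
    record = []
    for step, value in zip(steps, entries):
        ok = step.check(value)
        record.append((step.instruction, value, ok))
        if not ok:
            return record, False
    return record, len(record) == len(steps)

# Hypothetical procedure with an acceptance criterion for each step
procedure = [
    Step("Weigh sample (g)", lambda v: 0.95 <= v <= 1.05),
    Step("Dilute to volume (mL)", lambda v: v == 100),
    Step("Record pH", lambda v: 0 <= v <= 14),
]

record, completed = execute_script(procedure, [1.01, 100, 7.2])
print(completed, len(record))   # True 3

record, completed = execute_script(procedure, [1.20, 100, 7.2])
print(completed, record)        # False [('Weigh sample (g)', 1.2, False)]
```

The structure here comes from the procedure itself, which is why this type of system complements a LIMS rather than replacing it.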

The role of processes in integration

From the standpoint of equipment, laboratories are often a collection of instruments, computer systems, sample preparation stations, and other test or measurement facilities. One goal frequently stated by lab managers is that “ideally we’d like all this to be integrated.” The purpose of integration is to streamline the operations, reduce human labor, and provide a more efficient way of doing work. You are integrating the equipment and systems used to execute one or more processes. However, without a thorough evaluation of the processes in the lab, there is no basis for integration.

Highlighting the connection between processes and integration, we see why defining what laboratory automation is remains important. One definition can lead to purchasing products and limiting the scope of automation to individual tasks. Another will take you through an evaluation of how your lab works, how you want it to work, and how to produce a framework for getting you there.

The elements of laboratory technology management

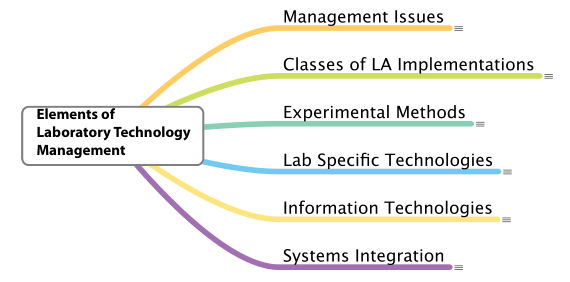

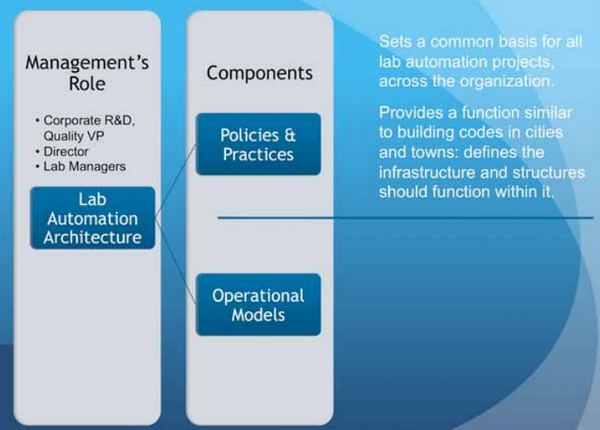

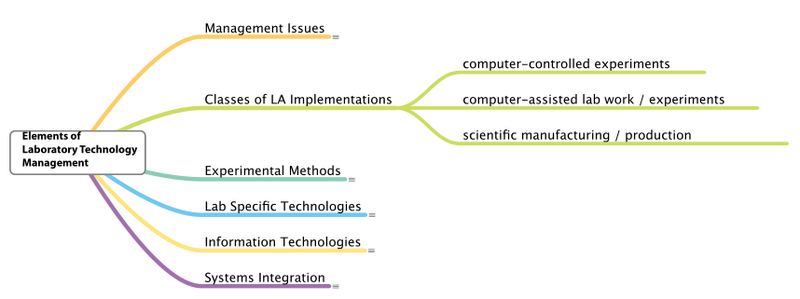

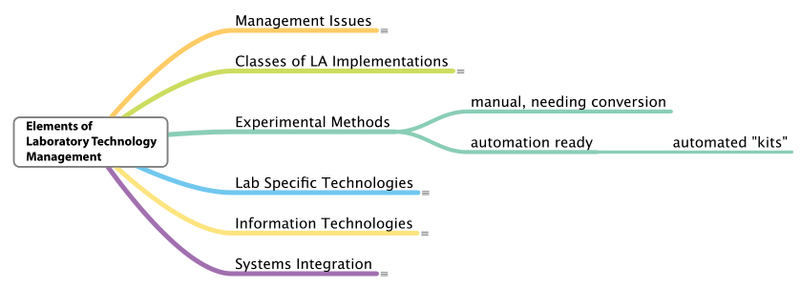

If lab automation is a process, we need to look at the elements that can be used to make that process work. The first thing that is needed is a structure that shows how elements of laboratory automation relate to each other and acts as a guide for someone coming into the field. That structure also serves as a framework for organizing knowledge about the field. The major elements of the structure are shown in Figure 3.

Why is it important to recognize these laboratory automation management elements? As automation technology sees broader appeal across multiple industries, there is an inevitability to the use of automation technologies in labs. Vendors are putting chips and programmed intelligence into every product with the goal of making them easier to use, while reducing the role of human judgment (which can lead to an accumulation of errors in tasks like pipetting) and the amount of work people have to do to get work done. There are few negatives to this philosophy, aside from one point: if we don’t understand how these systems are working and haven’t planned for their use, we won’t get the most benefit from them, and we may accept results from systems blindly without really questioning their suitability and the results they produce. One of the main points of this work is that the use of automation technologies should be planned and managed. That brings us to the first major element: management issues.

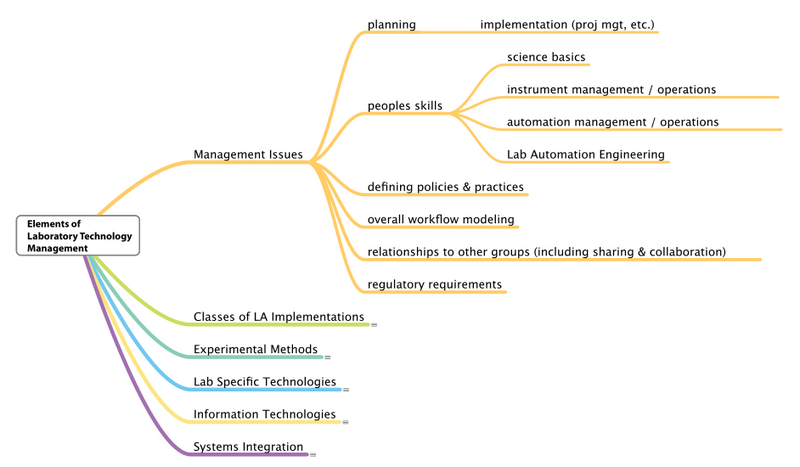

Management issues

The first thing we need to address is who “management” is. Unless the lab is represented solely by you, there are presumably layers of management. Depending on the size of the organization, this may mean one individual or a group of individuals who are responsible for various management aspects of the laboratory. Broadly speaking, those individuals will have a skillset that can appropriately address laboratory technology management, including project and program management, people skills, workflow modeling, and regulatory knowledge, to name a few (Figure 4). The need for these skillsets may vary slightly depending on the type of manager.

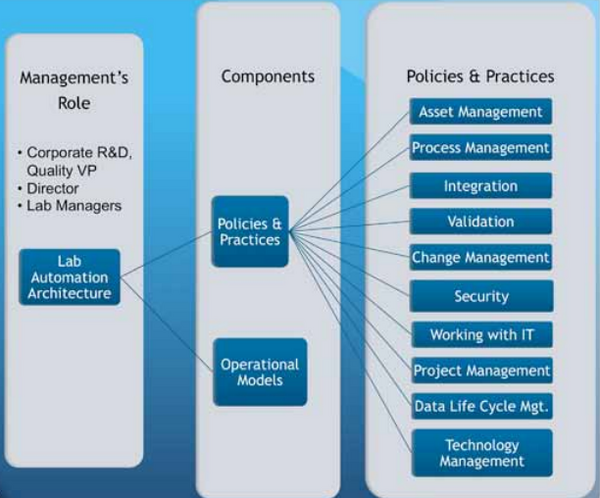

Aside from reviewing and approving programs ("programs" are efforts that cover multiple projects), “senior management” is responsible for setting the policies and practices that govern the conduct of laboratory programs. This is part of an overall architecture for managing lab operations, and has two components (Figure 5): setting policies and practices and developing operational models.[lower-alpha 5]

Before the incorporation of informatics into labs, senior management’s involvement wasn’t necessary. However, the storage of intellectual property in electronic media has forced a significant change in lab work. Before informatics, labs used to be isolated from the rest of the organization, with formal contact made through the delivery of results, reports, and presentations. The desire for more effective, streamlined, and integrated information technology operations, and the development of information technology support groups, means that labs are now also part of the corporate picture. In organizations that have multiple labs, more efficient use of resources results in a desire to reduce duplication of work. You might easily justify two labs having their own spectrophotometers, but duplicate LIMS doing the same thing are going to require some explaining.

With the addition of informatics in the lab, management involvement is critical to support the success of laboratory programs. Among the reasons often cited for failure of laboratory programs is the lack of management involvement and, by extension, a lack of oversight, though these failures are usually stated without clarifying what management involvement should actually look like. To be clear, senior management's role is to ensure that programs:

- are common across all labs, such that all programs are conducted according to the same set of guidelines;

- are well-designed, supportable, and can be upgraded;

- are consistent with good project management practices;

- are conducted in a way that allows the results to be reused elsewhere in the company;

- are well-documented; and

- lead to successful results.

When work in lab automation began, it was usually the effort of one or two individuals in a lab or company. Today, we need a cooperative effort from management, lab staff, IT support, and, if available, laboratory automation engineers (LAEs). One of the reasons management must establish policies and practices (Figure 6) is to enable people to work together effectively, so they are working from the same set of ground rules and expectations and producing consistent and accurate results.

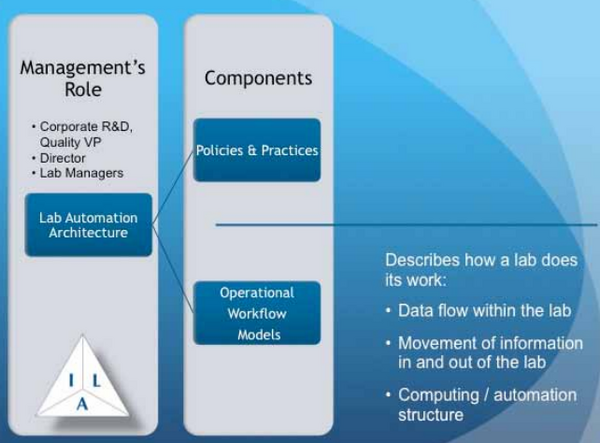

"Lab management" is responsible for understanding how their lab needs to operate in order to meet the lab's goals. Before automation became a factor, lab management’s primary concern was managing laboratory personnel and helping them get their work done. In the early stages of lab automation, the technologies were treated as add-ons that assisted personnel in getting work done. Today, lab managers need to move beyond that mindset and look at how their role must shift towards planning for and managing automation systems that take on more of the work in the lab. As a result, lab managers need to take on the role of technology planners in addition to managing people. The implementation of those plans may be carried out by others (e.g., laboratory automation engineers [LAEs], IT specialists), but defining the objectives and how the lab will function with a combination of people and systems is a task squarely in the lap of lab management, requiring the use of operational workflow models (Figure 7) to define the technologies and products suitable for their lab's work.

Problems can occur when different operational groups have conflicting sets of priorities. Take for example the case of labs and IT support. At one point, lab computing only affected the lab systems, but today's labs share resources with other groups, requiring "IT management" to ensure that work in one place doesn’t adversely impact another. Most issues around elements such as networks and security can be handled through effective network design and bridges that isolate network segments when necessary. More often than not, problems are likely to occur in the choice and maintenance of products, and the policies IT implements to provide cost-effective support. Operating system upgrades are one place issues can occur, if those changes cause products used in lab work to break because the vendor is slow in responding to OS changes. Another place that issues can occur is in product selection; IT managers may want to minimize the number of vendors they have to deal with and prefer products that use the same database system deployed elsewhere. That policy may adversely limit the products that the lab can choose from. From the lab's perspective, they need the best products in order to get their work done; from the IT group's perspective, they see it as driving up support costs. The way to avoid these issues, and others, is for senior managers to determine the priorities and keep the inter-department politics out of the discussion.

Classes of laboratory automation implementation

The second element of laboratory technology management to address is laboratory automation implementation. There are three classes of implementation to address (Figure 8):

- Computer-controlled experiments: This includes data collection in high-energy physics, LabVIEW-implemented systems, instrument command and control, and robotics. This implementation class involves systems where the computer is an integral part of the experiment, doing data collection and/or experiment control.

- Computer-assisted lab work/experiments: This includes work that could be done without a computer, but machines and software have been added to improve the process. Examples include chromatography data systems (CDS), ELNs used for documentation, and classic LIMS.

- Scientific manufacturing: This implementation class focuses on production systems, including high-throughput screening, lights-out lab automation, process analytical technologies, and quality by design (QbD) initiatives.

Computer-controlled experiments

This is where the second phase of laboratory automation began: when people began using digital computers with connections to their instrumentation. We moved in stages from simple data acquisition, acquiring a few points to show that we can accurately represent voltages from the equipment, to collecting multiple data streams over time and storing the results on disks. The next step consisted of automatically collecting the data, processing it, storing it, and reporting results from equipment such as chromatographs, spectrophotometers, and other devices.

Software development moved from assembly language programming to higher-level language programming, and then to specialized systems that provide a graphical interface to the programmer. Products like LabVIEW[lower-alpha 6] allow the developer to use block diagrams to describe the programming and processing that have to be done, and provide the user with an attractive user interface with which to work. This is a far cry from embedding machine language programming in BASIC code, as was done in some earlier PC systems.

Robots offer another example of this class of work, where computers control the movement of equipment and materials through a process that prepares samples for analysis, and may include the analysis itself.

While commercial products have overtaken much of the work of interfacing, data acquisition, and data processing (in some cases, the instrument-computer combination is almost indistinguishable from the instrument itself), the ability to deal with instrument interfacing and programming is still an essential skillset for those working in research and applications where commercial systems have yet to be developed.

It’s interesting that people often look at modern laboratory instrumentation and say that everything has gone digital. That’s far from the case. They may point to a single pan balance or thermometer with a digital readout as examples of a “digital” instrument, not realizing that the packaging contains sensors, analog-digital (A/D) converters, and computer control systems to manage the device and its communications. The appearance of a “digital” device masks what is going on inside; we still need to understand the transition from the analog world into the digital domain.
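The analog-to-digital transition mentioned above can be sketched as an ideal converter that maps a voltage onto one of 2^n integer codes. This assumes an ideal, noise-free converter with a hypothetical 0 to 10 V input range and 12-bit resolution; real converters also have offset, gain, and linearity errors that this sketch ignores.

```python
import math

def adc_convert(voltage, v_min=0.0, v_max=10.0, bits=12):
    """Ideal n-bit A/D conversion: map a voltage onto an integer code, clamped to range."""
    levels = 2 ** bits
    code = math.floor((voltage - v_min) / (v_max - v_min) * levels)
    return max(0, min(levels - 1, code))   # out-of-range inputs saturate at the ends

def code_to_voltage(code, v_min=0.0, v_max=10.0, bits=12):
    """Voltage at the lower edge of the code's quantization step."""
    lsb = (v_max - v_min) / 2 ** bits       # one step is about 2.44 mV for 12 bits over 10 V
    return v_min + code * lsb

print(adc_convert(2.5), adc_convert(2.501))    # 1024 1024: a 1 mV change is below one step
print(adc_convert(10.0), adc_convert(-0.5))    # 4095 0: full-scale and negative inputs clamp
print(code_to_voltage(1024))                   # 2.5
```

Even this idealized view shows why the analog side still matters: resolution, input range, and clamping all shape the numbers a "digital" instrument reports.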

Computer-assisted lab work/experiments

There is a difference between work that can be done by computer and work that has to be done by computer; we just looked at the latter case. There’s a lot of work that goes on in laboratories that could be done and has been done by people, but in today’s labs we prefer to do that work with the aid of automated systems. For example, the management of samples in a testing laboratory used to be done by people logging samples in and keeping record books of the work that is scheduled and has been performed. Today, that work is better managed by a LIMS (or a LIS in the clinical world). The analysis of instrumental data used to be done by hand, and now it is more commonly done by instrument data systems that are faster, more accurate, and permit more complex analysis at a lower cost.
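As an example of the kind of analysis instrument data systems took over, the sketch below computes a chromatographic peak area by trapezoidal integration above a straight baseline drawn between the peak's first and last points. This is a simplified, hypothetical illustration; commercial data systems use more elaborate peak detection and baseline handling, and the sample data are invented.

```python
def peak_area(times, signal):
    """Trapezoidal peak area above a straight baseline from the first to the last point."""
    if len(times) != len(signal) or len(times) < 2:
        raise ValueError("need matching time and signal lists with at least two points")
    t0, t1 = times[0], times[-1]
    y0, y1 = signal[0], signal[-1]
    if t1 == t0:
        raise ValueError("peak must span a nonzero time interval")
    slope = (y1 - y0) / (t1 - t0)
    # Subtract the drifting baseline before integrating.
    corrected = [y - (y0 + slope * (t - t0)) for t, y in zip(times, signal)]
    area = 0.0
    for i in range(1, len(times)):
        area += 0.5 * (corrected[i] + corrected[i - 1]) * (times[i] - times[i - 1])
    return area

# Hypothetical peak sitting on a baseline that drifts from 1.0 to 3.0
times = [0, 1, 2, 3, 4]
signal = [1.0, 2.5, 4.0, 3.5, 3.0]
print(peak_area(times, signal))   # 4.0
```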

Do robots fit in this category? One could argue they are simply doing work that was performed manually in the past. The reason we might not consider robots in this class is that in many cases the equipment used by robots is different from the equipment used by human beings. As such, the two really aren’t interchangeable. If the LIMS or instrument data system were down, people could pick up the work manually; however, that may not be the case if a robot goes offline. It’s a small point, and you can go either way on it.

The key element for this class of implementation is that the use of computers—and, if you prefer, robotics—is an option and not a requirement. Yet that option improves productivity, reduces cost, and provides better quality and more consistent data.

Scientific manufacturing

This isn’t so much a new implementation class as it is a formal recognition that much of what goes on in a laboratory mirrors work that’s done in manufacturing; some work in quality control is so routine that it matches assembly line work of the 1960s. The major difference, however, is that a lab's major production output is knowledge, information, and data (K/I/D). The work in this category is going to expand as a natural consequence of increasing automation, which must be addressed. If this is the direction things are going to go, then we need to do it right.

Recognizing this point has significant consequences. Rather than just letting things evolve, we can take advantage of the situation and drive applications that are suited to this level of automation into useful practice. This means we must:

- convert laboratory methods to fully automated systems;

- deliberately design and manage equipment and control, acquisition, and processing systems to meet the needs of this kind of application;

- train people to work in a more complex environment than they have in the past; and

- build the automation infrastructure (i.e., interfacing and data standards) needed to make these systems realizable and effective, without taking on significant cost.

In short, this means opening up another dimension to laboratory work as a natural evolution of work practices. If you look at the direction things are going in the lab, where large sample volume processing is necessary (e.g., high-throughput screening), it is simply a reflection of reality.

If we look at quality control applications and manufacturing processes, we basically see one production process (quality control or QC) layered on another (production), where ultimately the two merge into continuous production and testing. This is a logical conclusion to work described by process analytical technologies and quality-by-design.

This doesn’t diminish the science used in laboratory work; rather, it adds a level of sophistication that hasn’t been widely dealt with: thinking beyond the basic science process to its implementation in a continuous automated system. This is a much more complex undertaking since (1) data will be created at a high rate and (2) we want to be sure that this is high-quality data and not just the production of junk at a high rate.
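One conventional way to keep a high-rate automated system from producing junk is to compare each result against control limits derived from historical control data, as on a Shewhart control chart. The sketch below assumes the common mean ± 3 standard deviation limits; the function names and data are hypothetical, and a production system would typically apply additional rules.

```python
from statistics import fmean, stdev

def control_limits(reference, k=3.0):
    """Center line and +/- k standard deviation limits from historical control results."""
    center = fmean(reference)
    sd = stdev(reference)          # sample standard deviation
    return center - k * sd, center, center + k * sd

def flag_out_of_control(results, limits):
    """Return the indices of results that fall outside the control limits."""
    lower, _, upper = limits
    return [i for i, r in enumerate(results) if r < lower or r > upper]

# Hypothetical historical control results and a new batch from an automated run
limits = control_limits([10.0, 10.2, 9.8, 10.1, 9.9])
batch = [10.05, 9.95, 10.9, 9.3, 10.1]
print(flag_out_of_control(batch, limits))   # [2, 3]
```

Checks like this run at the same rate as the data, which is the point: at high throughput, nobody can inspect every result by eye.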

This type of thinking is not limited to quality control work. It can be readily applied to research as well, where economical high-volume experiments can be used to support statistical experimental design methodologies and more exhaustive sample processing, as well as today’s screening applications in life sciences. It is also readily applied to environmental monitoring and regulatory evaluations. And while this kind of thinking may be new to scientific applications, it isn’t new technology. Work that has been done in automated manufacturing can serve as a template for the work that has to be done in laboratory process automation.

Let's return to the first two bullets in this section: laboratory method conversion and system management and planning. If you gave four labs a method description and asked them to automate it, you’d get four different implementations, with each group doing the same thing independently. If we are going to turn lab automation into the useful tool it can be, we need to take a different approach: cooperative development of automated systems.

In order to be useful, a description of a fully automated system needs more than a method description. It needs equipment lists, source code, etc., in sufficient detail that you can purchase the equipment needed and put the system together, expecting it to work. However, we can do better than that. In a given industry, where labs are doing the same testing on the same types of samples, we should be able to have them come together to design and test automated systems that meet the need. Once that is done, vendors can pick up the description and build products suitable for carrying out the analysis or test. The problem labs face is getting the work done at a reasonable cost. If there isn’t a competitive advantage to having a unique test, labs should cooperate so that standardized testing modules can be developed.

This changes the process of lab automation from a build-it-from-scratch mentality to the planned connection of standardized automated components into a functioning system.

Experimental methods

There is relatively little that can be said about experimental methods at this point. Aside from the clinical industry, not enough work has been done to give really good examples of intentionally designed automated systems that can be purchased, installed in a lab, and expected to function.[lower-alpha 7] There are some examples, including ELISA robotics analysis systems from Caliper Life Sciences and Pressurized Liquid Extraction Systems from Fluid Management Systems. Many laboratory methods are designed with the assumption that people will be doing the work manually, and any addition of automation would require conversion of that method (Figure 9).


What we need to begin doing is looking at the development of automated methods as a distinct task, similar to the manual methods published by ASTM International (ASTM), the Environmental Protection Agency (EPA), and the United States Pharmacopeia (USP). The difference is that automation is not viewed as mimicking human actions but as well-designed and optimized systems that support the science and any associated production processes (i.e., a scientific manufacturing implementation that includes integration with informatics systems). We need to think “bigger,” not limiting our vision to the immediate task but looking at how it fits into lab-wide operations.

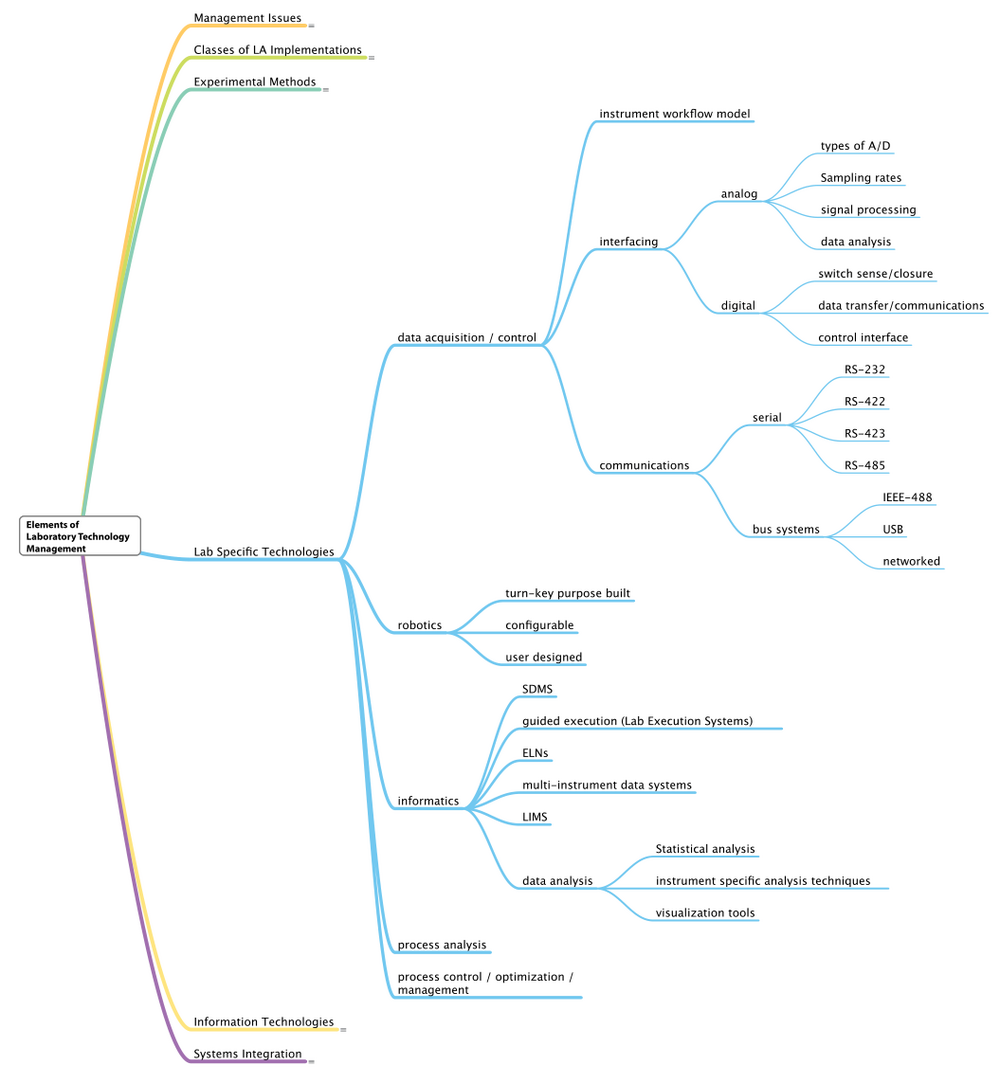

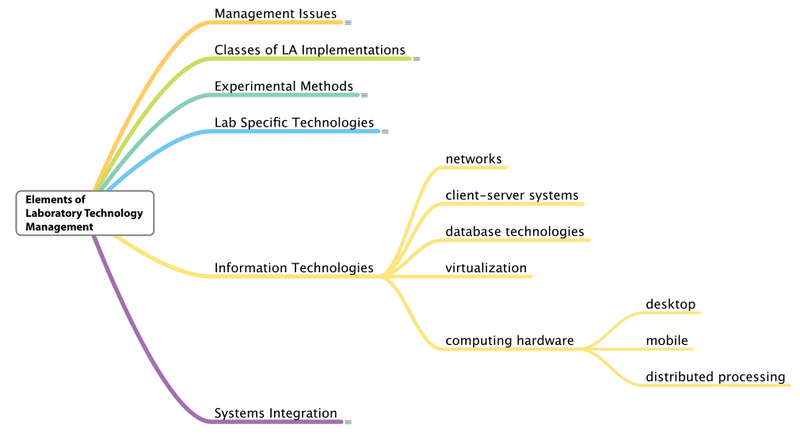

Lab-specific technologies and information technology

This section quickly covers the next two elements of laboratory technology management at the same time, as many aspects of these elements have already been covered elsewhere. See Figure 10 and Figure 11 for a deeper dive into these two elements.[lower-alpha 8]


There is one point that needs to be made with respect to information technologies. While lab managers do not need to understand the implementation details of those technologies, they do need to be aware of the potential they offer in the development of a structure for laboratory automation implementations. Management is responsible for lab automation planning, including choosing the best technologies; in other words, management must manage the “big picture” of how technologies are used to meet their lab's purpose.

In particular, managers should pay close attention to the role of client-server systems and virtualization, since they offer design alternatives that impact the choice of products and the options for managing technology. This is one area where good relationships with IT departments are essential. We’ll be addressing these and other information technologies in more detail in other publications.

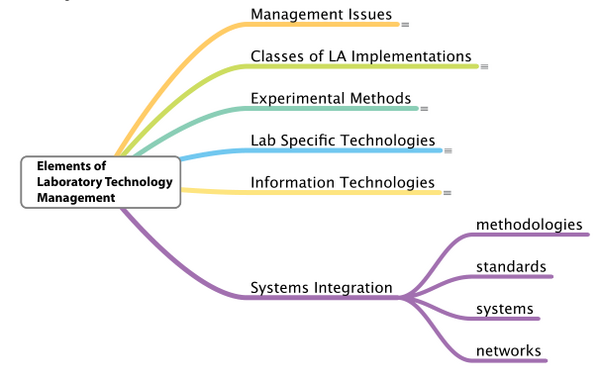

Systems integration

Systems integration is the final element of laboratory technology management, one that has been dealt with at length in other areas.[3][4] Many of the points noted above, particularly in the management sections, demand attention be paid to integration in order to develop systems that work well. When systems are planned, they need to be designed with an eye toward integrating the components, something today’s technologies are largely not yet capable of (aside from those built around microplates and clinical chemistry applications). This isn’t going to happen magically, nor is it the province of vendors to define it. This is a realm that the user community has to address by defining the standards and methodologies for integration (Figure 12). The planning that managers have to do as part of technology management has to be done with an understanding of the role integration plays and an ability to choose solutions that lead to well-designed integrated systems. The concepts behind scientific manufacturing depend on it, just as integration is required in any efficient production process.


The purpose of integration in the lab is to make it easier to connect systems. For example, a CDS may need to pass information and data to a LIMS or ELN and then on to other groups. The resulting benefits of this ability to integrate systems include:

- smoother workflow, meaning less manual effort while avoiding duplication of data entry and data entry errors, something already being pursued and accomplished in production environments, including manufacturing, video production, and graphics design;

- easier path for meeting regulatory requirements, as integration built in by vendors results in systems that are easier to validate and maintain;

- reduced cost of development and support;

- reduction in duplication of records via better data management; and

- more flexibility, as integrated systems built on modular components will make it easier to upgrade or update systems, and meet changing requirements.

The inability to integrate systems and components through vendor-provided mechanisms results in higher development and support costs, increased regulatory burden, and reduced likelihood that projects will be successful.

What is an integrated system in the laboratory?

Phrases like “integrated system” are used so commonly that it seems as though there should be instant recognition of what they are. While the words may bring a concept to mind, do we have the same concept in mind? For the sake of this discussion, the concept of an integrated system has several characteristics. First, in an integrated system, a given piece of information is entered once and then becomes available throughout the system, restricted only by access privileges. The word “system” in this case is the summation of all the information handling equipment in the lab. It may extend beyond the lab if process connections to other departments are needed. Second, the movement of materials (e.g., during sample preparation), information, and data is continuous from the start of a process through to the end of that process, without the need for human effort. The sequence doesn’t have to wait for someone to do a manual portion of the process in order for it to continue, aside from policy conditions that require checks, reviews, and approvals before subsequent steps are taken.
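The two characteristics above (single entry with access-controlled visibility, and continuous flow that pauses only at policy checkpoints) can be sketched in a few lines. This is an illustration under assumptions, not a design: the store, role names, field names, and step names are all hypothetical, and a real system would sit on a shared database rather than an in-memory dictionary.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LabRecordStore:
    """A single shared store: each value is entered once and read everywhere."""
    records: dict[str, dict] = field(default_factory=dict)
    # Hypothetical access rules: role -> set of fields that role may read.
    permissions: dict[str, set[str]] = field(default_factory=dict)

    def enter(self, sample_id: str, **values) -> None:
        self.records.setdefault(sample_id, {}).update(values)

    def read(self, role: str, sample_id: str) -> dict:
        allowed = self.permissions.get(role, set())
        return {k: v for k, v in self.records[sample_id].items() if k in allowed}


Step = Callable[[LabRecordStore, str], None]


def run_workflow(store: LabRecordStore, sample_id: str,
                 steps: list[tuple[str, Step]],
                 approvals: dict[str, bool]) -> tuple[list[str], str]:
    """Run steps in order with no manual hand-offs.

    A step listed in `approvals` is a policy checkpoint: the workflow stops
    before that step until its approval flag is True.
    """
    completed = []
    for name, step in steps:
        if name in approvals and not approvals[name]:
            return completed, f"waiting for approval: {name}"
        step(store, sample_id)
        completed.append(name)
    return completed, "done"


store = LabRecordStore(permissions={
    "analyst": {"sample_type", "peak_area", "result"},
    "client": {"result"},
})
store.enter("S-001", sample_type="water")  # entered once, at sample login

steps = [
    ("acquire", lambda s, sid: s.enter(sid, peak_area=1520.0)),
    ("calculate", lambda s, sid: s.enter(sid, result=s.records[sid]["peak_area"] / 152.0)),
    ("release", lambda s, sid: s.enter(sid, status="released")),
]
done, state = run_workflow(store, "S-001", steps, approvals={"release": False})
print(done, state)                    # ['acquire', 'calculate'] waiting for approval: release
print(store.read("client", "S-001"))  # {'result': 10.0}
```

Nothing in the sequence waits for a person except the release step, which is held by policy, and no value is re-keyed between steps.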

An integrated system should result in a better place for personnel to work, as people would no longer be depended upon for repetitive work. Leaving personnel to conduct repetitive work has several drawbacks. First, people get bored and make mistakes (some minor, some not, all of which contribute to variability in results). Second, the progress of work (productivity) depends on human effort, which may limit the number of hours a process can operate. More broadly, it's also a poor use of intelligent, educated personnel.

A brief historical note

For those who are new to the field, we’ve been working on system integration for a long time, with not nearly as much to show for it as we’d expect, particularly when compared to other fields that have seen an infusion of computer technologies. During the 1980s, the pages of Analytical Chemistry saw the initial ideas that would shape the development of automation in chemistry. Dr. Ray Dessy’s (then at Virginia Polytechnic Institute) articles on LIMS, robotics, networking, and IDS laid out the promise and expectation for electronic systems used to acquire and manage the flow of data and information throughout the lab.

That concept, the computer-integrated lab, was the basis of work by instrument and computer vendors, resulting in proof-of-concept displays and exhibits at PITTCON and other trade shows. After more than 30 years, we are still waiting for that potential to be realized, and we may not be much closer today than we were then. What we have seen is an increase in the sophistication of the tools available for lab work, including client-server chromatography systems and ELNs in their varied forms. In each case, we keep running into the same problem: an inability to connect things into working systems. The result is the use of product-specific code and workarounds for moving and parsing data streams. These are fixes, not solutions. Solutions require careful design, not just for the short-term "what-do-we-need-today" but also for long-term, robust designs that permit graceful upgrades and improvements without the need to start over from scratch.

The cost of the lack of progress

Every day the scientists and technicians in your labs are working to produce the knowledge, information, and data (K/I/D) your company depends upon to meet its goals. That K/I/D is recorded in notebooks and electronic systems. How well are those systems going to support your need for access today, tomorrow, or over the next 20 or more years? Twenty years is the minimum most companies require for guaranteed access to data.

The systems being put in place to manage laboratory K/I/D are complex. Most laboratory data management systems (i.e., LIMS, ELN, IDS) are a combination of four separate products: hardware, operating system, database management system, and the application you and your staff use, each from a different company with its own product life cycle. This means that changes can occur at any of those levels, asynchronously, without consideration for the impact they have on your ability to work.

Lab managers are usually trained in the sciences and personnel aspects of laboratory management. They are rarely trained in technology management and planning for laboratory robotics and informatics, the tools used today to get laboratory work done and manage the results. The consequences of inadequate planning can be significant:

In January 2006, the FBI ended the LIMS project, and in March 2006 the FBI and JusticeTrax agreed to terminate the contract for the convenience of the government. The FBI agreed to pay a settlement of $523,932 to the company in addition to the money already spent on developing the system and obtaining hardware. Therefore, the FBI spent a total of $1,380,151 on the project. With only the hardware usable, the FBI lost $1,175,015 on the unsuccessful LIMS project.[5]

Other instances of problems during laboratory informatics projects include:

- A 2006 Association for Laboratory Automation survey on the topic of industrial laboratory automation posed the following question, with the percentage of respondents choosing each answer in parentheses: “My Company/Organization’s Senior Management Feels its Investment in Laboratory Automation Has:” succeeded in delivering the expected benefits (56%); produced mixed results (43%); not delivered the expected benefits (1%). In other words, 44% (43% plus 1%) failed to fully realize expectations.[6]

- A long-circulated statistic says that some 60 percent of LIMS installations fail.[7]

- The Standish Group's CHAOS Report (1995) on project failures (looking at ERP implementations) shows that over half will fail, and 31.1% of projects will be canceled before they ever get completed. Further results indicate 52.7% of projects will cost over 189% of their original estimates.[8]

From a more anecdotal standpoint, we’ve received a number of emails discussing the results of improperly managing projects. Stories that stand out among them include:

- An anonymous LIMS customer was given a precise fixed-price quote (somewhere around $90,000) and then got hit with several hundred thousand dollars in extras after the contract was signed.

- An anonymous major pharmaceutical company some years back had implemented a LIMS with a lot of customization that was generally considered to be successful, until it came time to upgrade. They couldn’t do it and went back to square one, requiring the purchase of another system.

- An anonymous business reported robotics system failures totaling over $700,000.

- Some respondents reported that vendors were using customer sites as test beds for software development.

- Three labs of different types, with differing requirements, tried to use the same system to reduce costs; nearly $500,000 was spent before the project was cancelled.

In addition to those costs, there are the costs of missed opportunities, project delays, departmental and employee frustration, and the fact that the problems you wanted to solve are still sitting there.

The causes for failures are varied, but most include factors that could have been avoided by making sure those involved were properly trained. Poor planning, unrealistic goals, inadequate specifications (including a lack of regulatory compliance requirements), project management difficulties, scope creep, and lack of experienced resources can all play a part in a failed laboratory technology project. The lack of features that permit the easy development of integrated systems can also be added to that list. That missing element can cause projects to balloon in scope, requiring people to take on work they may not be properly prepared for, or to pursue approaches that are not technically feasible, something developers don’t realize until they are deeply involved in the work.

The methods people use today to achieve integration result in cost overruns, project failures, and systems that can’t be upgraded or modified without significant risk of damaging the integrity of the existing system. One individual reported that his company’s survey of customers found that systems were integrated in ways that prevented upgrades or updates; the coding was specific to a particular version of software, and any changes could result in scrapping the current system and starting over.

One way of achieving “integration” is similar to how one might integrate household wiring by hard-wiring all the lamps, appliances, TVs, etc. to the electrical cables. Everything is integrated, but change isn’t possible without shutting off the power to everything, going into the wall and making the wiring changes, and then repairing the walls and turning things back on. When considering systems integration, that’s not the model we have in mind; however, from the comments we’ve received, it is the way people are implementing software. We’re looking for the ability to connect things in ways that permit change, like the wiring in most households: plug and unplug. That level of compatibility and integration results from the development of standards for power distribution and for connections: the design of plugs and sockets for specific voltages, phasing, and polarity so that the right type of power is supplied to the right devices.

Of course, there are other ways to practically connect systems, new and old. The Universal Serial Bus (USB) standard allows the same connector to be used for connecting portable storage, cameras, scanners, printers, and other communications devices with a computer. Another older example can be found with modular telephone jacks and tone dialing, which evolved to the more mobile system we have today. However, we probably wouldn't have the level of sophistication we have now if we relied on rotary dials and hard-wired phones.

These are just a few examples of component connections that can lead to systems integration. When we consider integrating systems in the lab, we need to look at connectivity and modularity (allowing us to make changes without tearing the entire system apart) as goals.
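In software, the plug-and-socket idea corresponds to a common pattern: the data source is written against one published interface, and any receiving system that implements it can be plugged in or unplugged without touching the source. A minimal sketch, assuming a hypothetical `ResultSink` interface (Python's `typing.Protocol` stands in for an industry connection standard); the LIMS and ELN classes are stubs, not real products.

```python
from typing import Protocol


class ResultSink(Protocol):
    """The 'socket': any system that accepts results through this one method."""
    def accept(self, sample_id: str, analyte: str, value: float, units: str) -> None: ...


class LimsStub:
    """Stand-in for a LIMS that stores results as rows."""
    def __init__(self) -> None:
        self.rows: list[tuple[str, str, float, str]] = []

    def accept(self, sample_id: str, analyte: str, value: float, units: str) -> None:
        self.rows.append((sample_id, analyte, value, units))


class ElnStub:
    """Stand-in for an ELN that records results as text entries."""
    def __init__(self) -> None:
        self.entries: list[str] = []

    def accept(self, sample_id: str, analyte: str, value: float, units: str) -> None:
        self.entries.append(f"{sample_id}: {analyte} = {value} {units}")


def publish(results: list[tuple[str, str, float, str]], sinks: list[ResultSink]) -> None:
    """The data source knows only the interface, not the products behind it."""
    for sample_id, analyte, value, units in results:
        for sink in sinks:
            sink.accept(sample_id, analyte, value, units)


lims, eln = LimsStub(), ElnStub()
publish([("S-001", "benzene", 4.2, "ug/L")], sinks=[lims, eln])
print(eln.entries)  # ['S-001: benzene = 4.2 ug/L']
```

Adding, removing, or replacing a receiving system changes only the list passed to `publish`; in the hard-wired model, by contrast, each connection is coded into the products on both ends.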

What do we need to build integrated systems?

The lab systems we have today are not built for system-wide integration. They are built by vendors and developers to accomplish a specific set of tasks; connections to other systems are either not considered or avoided for competitive reasons. If we want to consider the possibility of building integrated systems, there are at least five elements that are needed:

- Education

- User community commitment

- Standards (e.g., file formatting, messaging, interconnection)

- Modular systems

- Stable operating system environment

Education: Facilities with integrated systems are built by people trained to do it. This was discussed as part of the concept of LAEs, published in 2006.[9][lower-alpha 9] However, the educational issues don’t stop there. Laboratory management needs to understand its role in technology management. It isn’t enough to understand the science and how to manage people, as was the case 30 or 40 years ago. Managers have to understand how the work gets done and what technology is used to do it. The effective use (or the unintended misuse) of technologies can have as big an impact on productivity as anything else. The science also has to be adjusted for advanced lab technologies. Method development should be done with an eye toward method execution, asking "can this technique be automated?"

User community commitment: Vendors and developers aren’t going to provide the facilities needed for integration unless the user community demands them. Suppliers are going to have to spend resources in order to meet the demands for integration, and they aren’t going to do this unless there is a clear market need and users force them to meet that need. If we continue with “business as usual” practices of force fitting things together and not being satisfied with the result, where is the incentive for vendors to spend development money? The choices come down to these: you only purchase products that meet your needs for integration, you spend resources trying to integrate systems that aren’t designed for it, or your labs continue to operate as they have for the last 30 years, with incremental improvements.

Standards: Building systems that can be integrated depends upon two elements in particular: standardized file formats and messaging or interconnection systems that permit one vendor’s software package to communicate with another’s.

First, the output of an instrument should be packaged in an industry standardized file format that allows it to be used with any appropriate application. The structure of that file format should be published and include the instrument output plus other relevant information such as date, time, instrument ID, sample ID (read via barcode or other mechanism), instrument parameters, etc. Digital cameras have a similar setup for their raw data files: the pixel data and the camera metadata that tells you everything about the camera used to take the shot.
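A minimal sketch of such a self-describing record, assuming a hypothetical schema: the field names and `format_version` are illustrative and are not taken from ANDI, AnIML, or any other standard. JSON is used only for brevity; what matters is that the structure is published, so any application can read it.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class InstrumentDataFile:
    """Hypothetical record: instrument output packaged with its metadata."""
    format_version: str
    instrument_id: str
    sample_id: str        # e.g., read via barcode
    acquired_at: str      # ISO 8601 date and time
    parameters: dict      # instrument settings used for the run
    signal: list[float]   # the instrument output itself

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "InstrumentDataFile":
        return cls(**json.loads(text))


record = InstrumentDataFile(
    format_version="0.1",
    instrument_id="GC-07",
    sample_id="S-001",
    acquired_at=datetime(2021, 2, 1, 9, 30, tzinfo=timezone.utc).isoformat(),
    parameters={"oven_temp_C": 150, "carrier_flow_mL_min": 1.2},
    signal=[0.0, 0.4, 3.1, 0.5, 0.0],
)
text = record.to_json()
assert InstrumentDataFile.from_json(text) == record  # nothing lost in the round trip
```

As with a camera's raw file, the metadata travels with the data rather than living only inside one vendor's database.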

In the 1990s, the Analytical Instrument Association (AIA) (now the Analytical and Life Science Systems Association) had a program underway to develop a set of file format standards for chromatography and mass spectrometry. The program made progress and was turned over to ASTM, where momentum stalled. It was a good first attempt, but it had several problems that bear noting. The first is found in the name of the standard: the Analytical Data Interchange (ANDI) standard.[10] It was viewed as a means of transferring data between instrument systems and served as a secondary file format, with each instrument vendor’s own format remaining primary. This has regulatory implications, since the Food and Drug Administration (FDA) requires storage of the primary data and requires that the primary data be used to support submissions. It also means that files would have to be converted between formats as they moved between systems.

A standardized file format would be ideal for an instrumental technique. Each vendor would implement and use that format, and data collected from an instrument would be stored in it. In fact, it would be feasible to have a circuit board in an instrument function as a network node. It would collect and store instrument data and forward it to another computer for long-term storage, analysis, and reporting, thus separating data collection from data use. A similar arrangement already exists with instrument vendors that use networked data collection modules. The issue is further complicated by the nature of analytical work: a data file is meaningless without its associated reference material (standards, calibration files, etc.) used to develop calibration curves and evaluate qualitative and quantitative results.

While file format standards are essential, so is a second-order description: sample set descriptors that provide a context for each sample’s data file (e.g., a sample set might be a sample tray in an autosampler, and the descriptor would be a list of the tray’s contents). Work is underway for the development of another standard for laboratory data: ASTM WK23265 - New Specification for Analytical Information Markup Language. Its description indicates that it does take the context of the sample—its relationship to other samples in a run or tray—into account as part of the standard description.[11]
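The sample-set idea, combined with the point that a data file is meaningless without its reference material, can be sketched as a tray descriptor that links each position to its data file and its role in the run. The assumptions here are loud: the roles and file names are hypothetical, peak areas are taken as already extracted from each file, and a simple unweighted linear calibration stands in for whatever the method actually specifies. The sample's result can only be computed by going through the descriptor to find the standards run alongside it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrayPosition:
    position: int
    role: str                              # hypothetical roles: "blank", "standard", "sample"
    sample_id: str
    data_file: str                         # the per-sample data file for this position
    nominal_conc: Optional[float] = None   # known concentration (standards only)


@dataclass
class SampleSetDescriptor:
    run_id: str
    positions: list[TrayPosition]

    def standards(self) -> list[TrayPosition]:
        return [p for p in self.positions if p.role == "standard"]


def linear_fit(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def quantify(descriptor: SampleSetDescriptor, responses: dict[str, float],
             sample_id: str) -> float:
    """Convert a sample's response to concentration using its own run's standards."""
    stds = descriptor.standards()
    slope, intercept = linear_fit([p.nominal_conc for p in stds],
                                  [responses[p.data_file] for p in stds])
    target = next(p for p in descriptor.positions if p.sample_id == sample_id)
    return (responses[target.data_file] - intercept) / slope


tray = SampleSetDescriptor("RUN-42", [
    TrayPosition(1, "blank", "BLK-1", "run42_01.dat"),
    TrayPosition(2, "standard", "STD-1", "run42_02.dat", 1.0),
    TrayPosition(3, "standard", "STD-2", "run42_03.dat", 5.0),
    TrayPosition(4, "standard", "STD-3", "run42_04.dat", 10.0),
    TrayPosition(5, "sample", "S-001", "run42_05.dat"),
])
# Hypothetical peak areas extracted from each data file.
responses = {"run42_01.dat": 0.0, "run42_02.dat": 100.0, "run42_03.dat": 500.0,
             "run42_04.dat": 1000.0, "run42_05.dat": 250.0}
print(round(quantify(tray, responses, "S-001"), 3))  # 2.5
```

Without the descriptor, the file for S-001 holds a peak area of 250 and nothing more; with it, the result can be traced back to the specific standards that produced it.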

The second problem with the AIA’s program was that it was vendor-driven with little user participation. The transfer to ASTM should have resolved this, but by that point user interest had waned. People had to buy systems and they couldn’t wait for standards to be developed and implemented. The transition from proprietary file formats to standardized formats has to be addressed in any standards program.

The third issue with their program involved standards testing. Before you ask a customer to commit their work to a vendor’s implementation of a standard, they should have the assurance, through an independent third-party, that things work as expected.

Modular systems: The previous section notes that vendors have to assume that their software may be running in a stand-alone environment in order to ensure that all of the needed facilities are available to meet the user's needs. This can lead to duplication of functions. A multi-user instrument data system and a LIMS both have a need for sample login. If both systems exist in the lab, you’ll have two sample login systems. The issue can be compounded even further with the addition of more multi-instrument packages.

Why not break down the functionality in a lab and use one sample login module? It is simply a multi-user database system. If we were to do a functional analysis of the elements needed in a lab, with an eye toward eliminating redundancy and duplication while designing components as modules, integration would be a simpler issue. A modular approach—a system with a login module, lab management module, modules for data acquisition, chromatographic analysis, spectra analysis, etc.—would provide a more streamlined design, with the ability to upgrade functionality as needed. For example, a new approach to chromatographic peak detection, peak deconvolution, could be integrated into an analysis method without having to reconstruct the entire data system.
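A minimal sketch of the shared-login idea, assuming hypothetical module names modeled on the ones listed above (a login module and a lab management module) plus a chromatography module. Each consumer is handed the one login service rather than implementing its own, so a sample is registered exactly once.

```python
import itertools


class SampleLogin:
    """The one place in the lab where samples are registered."""
    def __init__(self) -> None:
        self._counter = itertools.count(1)
        self._samples: dict[str, dict[str, str]] = {}

    def login(self, description: str, submitter: str) -> str:
        sample_id = f"S-{next(self._counter):04d}"
        self._samples[sample_id] = {"description": description, "submitter": submitter}
        return sample_id

    def lookup(self, sample_id: str) -> dict[str, str]:
        return self._samples[sample_id]  # KeyError for unregistered samples


class ChromatographyModule:
    def __init__(self, login: SampleLogin) -> None:
        self.login = login  # shared module, not a second login system

    def label_run(self, sample_id: str) -> str:
        return f"{sample_id} ({self.login.lookup(sample_id)['description']})"


class LabManagementModule:
    def __init__(self, login: SampleLogin) -> None:
        self.login = login
        self.assigned_tests: dict[str, str] = {}

    def assign(self, sample_id: str, test: str) -> None:
        self.login.lookup(sample_id)  # only registered samples can be scheduled
        self.assigned_tests[sample_id] = test


login = SampleLogin()
cds, lab_mgmt = ChromatographyModule(login), LabManagementModule(login)
sid = login.login("river water, site 3", "j.doe")
lab_mgmt.assign(sid, "VOC screen")
print(cds.label_run(sid))  # S-0001 (river water, site 3)
```

Replacing the chromatography module (for example, with one that adds peak deconvolution) would not touch sample login or lab management.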

When people talk about modular applications, the phrase “LEGO-like” comes to mind. It is a good illustration of what we’d like to accomplish: easily connectable blocks and components that can be assembled into a wide variety of items, all based on a simple, standardized connection concept. There are two differences that we need to understand. First, with LEGO almost everything connects; in the lab, connections need to make sense. Second, LEGO is a single-vendor solution; unless you’re the vendor, that isn’t a good model. A LEGO-like multi-source model (including open source) of well-structured, well-designed, and well-supported modules that users could connect or configure would be an interesting approach to the development of integrable systems.

Modularity would also be of benefit when upgrading or updating systems. With functions distributed over several modules, only the modules that change would need to be retested, so the amount of testing and validation needed would be reduced. It should also be easier to add functionality. This isn’t some fantasy; this is what LAE is when you look at the entire lab environment rather than implementing products task-by-task in isolation.

Stable operating system environment: The foundation of an integrated system must be a stable operating environment. Operating system upgrades that require changes in applications coding are disruptive and lead to a loss of performance and integrity. It may be necessary to forgo the bells and whistles of some commercial operating systems in favor of open-source software that provides required stability. Upgrades should be improvements in quality and functionality where that change in functionality has a clear benefit to the user.

The elements noted above are just introductory commentary; each could fill a healthy document by itself. At some point, these steps are going to have to be taken. Until they are, and they result in tools you can use, labs—your labs—are going to be committing the results of your work into products and formats you have little control over. That should not be an acceptable situation; the use of proprietary file formats that limit your ability to work with your company’s data should end and be replaced with industry-standard formats that give you the flexibility to work as you choose, with whatever products you need.

We need to be deliberate in how we approach this problem. When discussing file format standards, it was noted that the data file for a single sample is useless by itself. If you had the file for a chromatogram for instance, you could display it and look at the conditions used to collect it; however, interpretation requires data from other files, so standards for file sets have to be developed. That wasn’t a consideration in the original AIA work on chromatography and mass spectrometry (though it was in work done on Atomic Absorption, Emission and Mass Spectroscopy Data Interchange Specification standards for the Army Corps of Engineers, 1995).

The first step in this process is for lab managers and IT professionals to become educated in laboratory automation and what it takes to get the job done. The role of management can’t be overstated; they have to sign off on the direction work takes and support it for the long haul. The education needs to focus on the management and implementation of automation technologies, not just the underlying science. After all, it is the exclusive focus on the science that leads to the silo-like implementations we have today. The user community's active participation in the process is central to success, and unless that group is educated in the work, the effect of that participation will be limited.

Second, we need to renew the development of industry-standard file formats, not just from the standpoint of encapsulating data files, but formats that ensure that the data is usable. The initial focus for each technique needs to be a review of how laboratory data is used, particularly with the advent of hyphenated techniques (e.g., gas chromatography–mass spectrometry or GC-MS), and that review should serve as the basis for defining the layers of standards needed to develop a usable product. This is a complex undertaking but worth the effort. If you’re not sure, consider how much your lab’s data is worth and the impact of its loss.

In the short term, we need to start pushing vendors (you have the buying power) to develop products with the characteristics needed to allow you to work with and control the results of your lab’s work. Products need to be developed to meet your needs, not the vendor's. Product criteria need to be set with the points above in mind, not on a company-by-company basis but as a community; you’re more likely to get results with a community effort.

Overcoming the barriers to the integration of laboratory systems is going to take a change in mindset on the part of lab management and those working in the labs. That change will result in a significant evolution in the way labs work, yielding higher productivity and a better working environment, with an improvement in the return on your company’s investment in your lab's operations. Laboratory systems need to be designed to be effective. The points noted here are one basis for that design.

Summary

That is a brief tour of what the major elements of laboratory technology management look like right now. The diagrams will change and details will be left to additional layers to keep the structure easy to understand and use. One thing that was sacrificed in order to facilitate clarity is the relationship between technologies. For example, a robotics system might use data acquisition and control components in its operations, which could be noted by a link between those elements.

There is room for added complexity in the map. Someone may ask where bioinformatics or some other subject resides. That, as well as other points (and there are a number of them), would be addressed in successive levels, giving the viewer the ability to drill down to whatever level of detail they need. The best way to view this is as an electronic map that can be explored by clicking on subjects for added information and relationships.

The complete diagram of the elements of laboratory technology is available as a PDF.

Skills required for working with lab technologies

While this subject could have arguably been discussed in the management section above, we needed to wait until the major elements were described before taking up this critical point. In particular, we had to address the idea behind "scientific manufacturing."

Lab automation has an identity problem. Many people don’t recognize it as a field. It appears to be a collection of products and technologies that people can use as needed. Emphasis has shifted from one technology to another depending on what is new, hot, or interesting, with conferences and papers discussing that technology until something else comes along. Robotics, LIMS, and neural networks have all had their periods of intense activity, and now the spotlight is on ELNs, integration, and paperless labs.

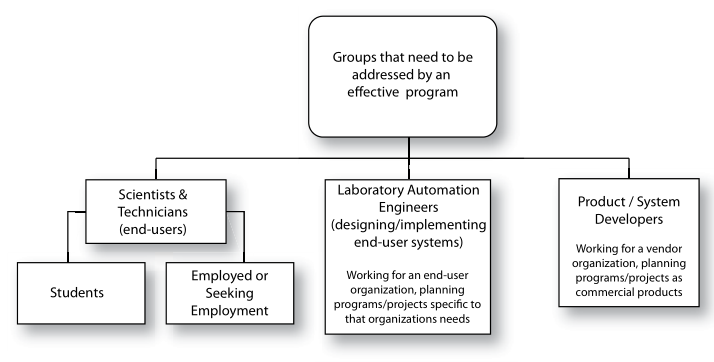

Lab automation needs to be addressed as a multi-disciplinary field, working in all scientific disciplines, by lab personnel, consultants, developers, and those in IT support groups. That means addressing three broad groups of people: scientists and technicians (i.e., the end users), LAEs (i.e., those designing and implementing systems for the end users), and the technology developers (Figure 13).


Discussions concerning lab automation and the use of advanced technologies in lab work are usually framed from the standpoint of the technologies themselves: what they are, what they do, their benefits, etc. Missing from these conversations is an appreciation of how well the end users, the scientists and technicians in the lab, are able to use these tools, and of how those tools will change the nature of laboratory work.

The application of analog electronic systems to laboratory work began in the early part of the twentieth century. For the most part, those systems made it easier for a scientist to make measurements. Recording spectrophotometers replaced wavelength-by-wavelength manual measurements, and process chromatographs automated sample taking, back-flush valve switching, attenuation changes, and so on. They made it easier to collect measurements but did not change the analyst's job of data analysis: analysts still had to look at each curve or chromatogram, make judgments, and apply their skills to making sense of the experiment. At this point, scientists were in charge of executing the science, while analog electronics made the science easier to carry out.

When processor-based systems were added to the lab’s tool set, things moved in a different direction. Computers could now perform the data acquisition, display, and analysis. This left the science to be performed by a program, with the analyst able to adjust the program's behavior by setting numerical parameters. This represents a major departure in the nature of laboratory work: from the scientist being completely responsible for executing lab procedures to a computer-based system taking over all or a portion of the work.

For many labs, increasingly sophisticated technologies are simply a way for individuals to do tasks better, faster, and at lower cost. In others, the technology takes over a task and frees the analyst to do other things. We’ve been in a slow transition from people driving work to technology driving work. As lab technologies move further into automation, the practice of laboratory work is going to change substantially, until we reach the point where scientific manufacturing and production is the dominant function: automation applied from sample acceptance to the final test or experimental result.

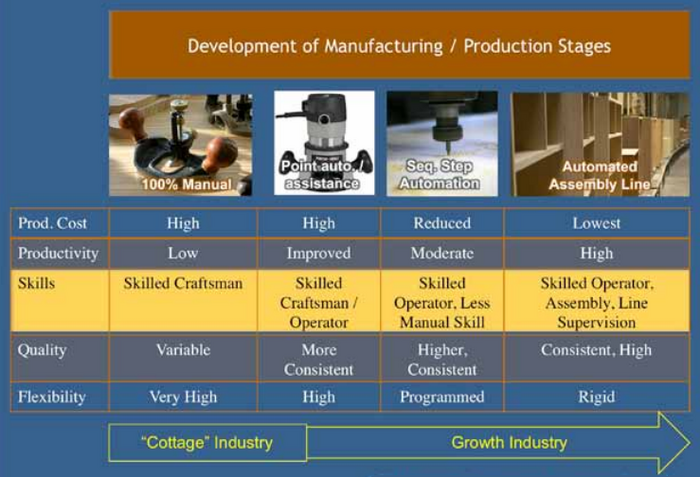

Development of manufacturing and production stages

We can get a sense of how work will change by looking at the development of manufacturing and production systems, where we see a transition from manual methods to fully automated production, ultimately driven by the same issues as in laboratories: a need or desire for high productivity, lower costs, and improved, consistent product results. The major difference is that in labs, the “product” isn’t a widget; it is information and data. In product manufacturing, reducing manpower is also a goal; in labs, the goal is to shift from manual effort to using that same energy to understand data and improve the science. One significant benefit of a shift to automation is that lab staff will be able to redesign lab processes (the science behind lab work) to function better in an automated environment; most of the processes and equipment in place today assume manual labor and are not well designed for automated control.

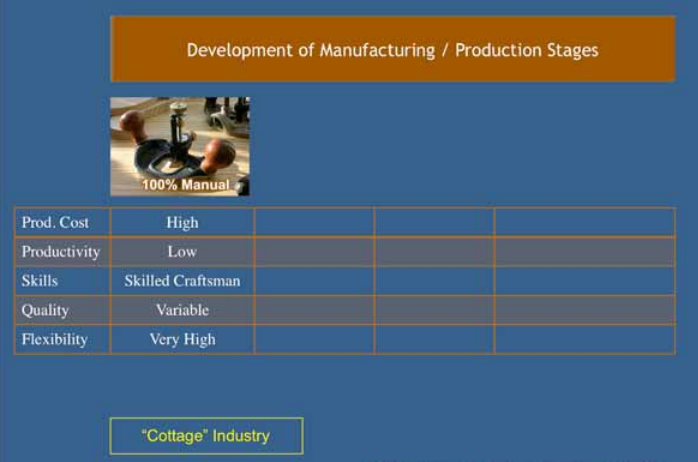

With that in mind, we’re going to look, by analogy, at a set of manufacturing and production stages associated with woodworking, e.g., making the components of door frames or moldings. The trim you see on wood windows and the framing on cabinet doors are examples of shaped wood. When we look at the stages of manufacturing or production, we have to consider five attributes of that production effort: relative production cost, productivity, required skills, product quality, and flexibility.

Initially, hand planes were used to remove wood and form trim components. Multiple passes were needed, each deepening the grooves and shaping the wood. It took practice, skill, and patience to do the work well and avoid waste. This was the domain of the craftsman, the skilled woodworker, who represents the first stage of production evolution. In terms of our evaluation, Figure 14 shows the characteristics of this first stage (we’ll fill in the table as we go along).


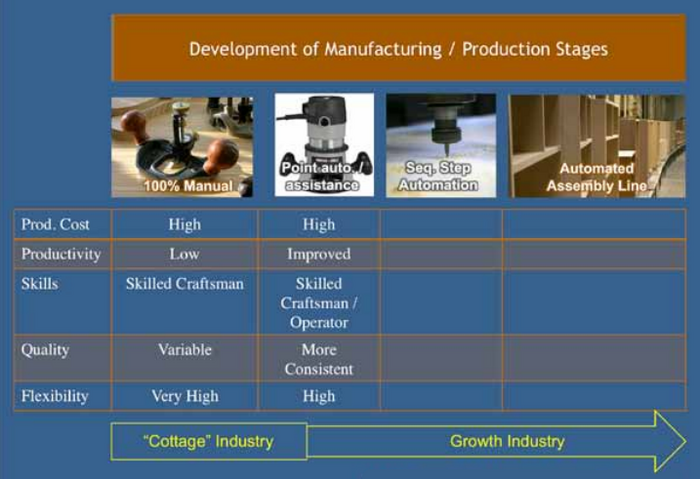

The next stage sees the craftsman turn to hand-operated, electric motor-driven routers to shape the wood. Instead of multiple passes with a hand plane, a motor-driven bit removes the material, leaving the finished product. A variety of cutting bits allows the craftsman to create different shapes. For example, a matching set of bits may be specially designed for the router to create the interlocking rails and stiles that frame cabinet doors.

Figure 15 shows the impact of this equipment on this second evolutionary stage of production. While such equipment is still available to the home woodworker, its use implies that the craftsman is going to be producing shaped wood in quantity, so we are moving beyond the level of production found in a cottage industry to the seeds of a growth industry. Good-quality routers and bits are modestly priced, but using them effectively requires an investment in developing skills. Used well (and safely), they can produce good products; they can also produce a lot of waste if the individual isn’t properly schooled.


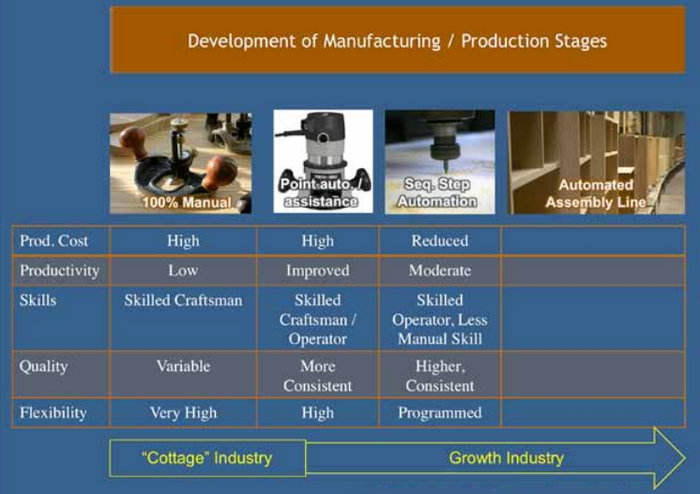

The third stage sees automated elements work their way into the woodworking mix, with the multi-headed numerically controlled router. Instead of one hand-operated router-bit combination, there are four router-bit assemblies directed by a computer program to follow a specific path so that highly repeatable and precise cuts can be made. Of course, with the addition of multiple heads and software, the complexity of the equipment increases.

Figure 16 shows the impact of this equipment on the third evolutionary stage of production. We’ve moved from the casual woodworker to a full production operation. The cost of the equipment is significant, and the operators (both the program designer and the machine operator) have to be skilled in its use to reduce mistakes and wasted material. The “Less Manual Skill” notation under "Skills" marks a transition point where we have moved almost entirely from the craftsman or woodworker to the skilled operator, who needs different skill sets than earlier production methods required. One side effect of higher production is that if you make a design error, you can produce out-of-specification product rather quickly.


From there, it's not a far jump to the final stage: a fully automated assembly line. Adding that stage completes the chart that we’ve been developing (Figure 17).


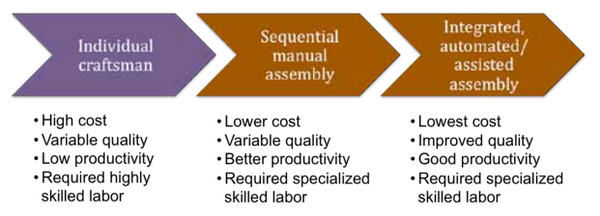

When we take the information from Figure 17, we can summarize the entire process as follows (Figure 18):


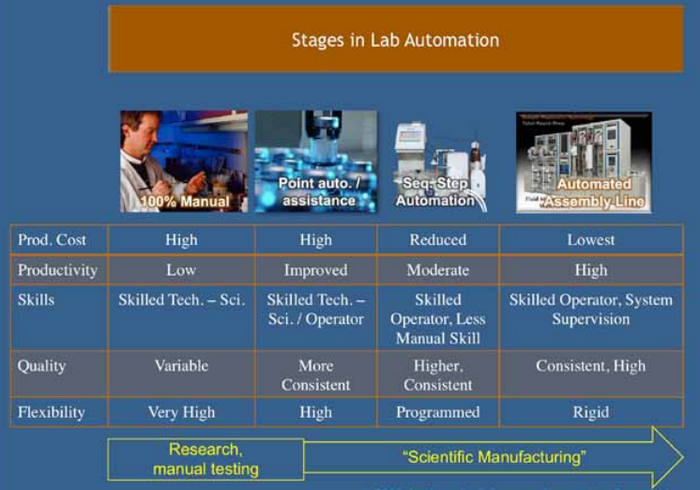

When we look at that summary, we can't help but notice that it translates fairly well when we replace "woodworking" with "laboratory work," moving from the entirely manual processes of the skilled technician or scientist to the fully automated scientific manufacturing and production process of the skilled operator or system supervisor. We visualize that in Figure 19. (The image in the last column of Figure 19 is of an automated extraction system from Fluid Management Systems, Watertown, MA.)


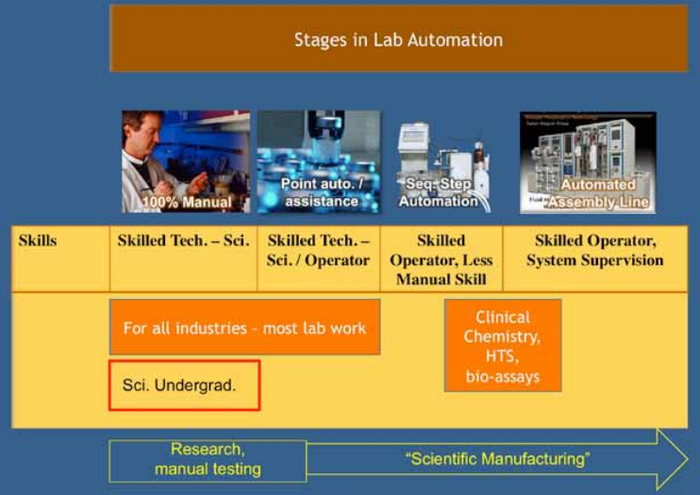

What does this all mean for laboratory workers?

The skills needed today and in the future to work in a modern lab have changed significantly, and they will continue to change as automation takes hold. We’ve seen these changes occur already. Clinical chemistry, high-throughput screening (HTS), and automated bioassays using microplates are some examples.

The discussion here mirrors the development in the woodworking example. We’ll look at the changes in skills using chromatography as an example. The following material applies to any laboratory environment, be it electronics, forensics, physical properties testing, etc.; chromatography is used here because of its wide application in lab work.

Stage 1: The analyst using manual methods

By “manual methods” we mean 100% manual work, including having the chromatographic detector output (an analog signal) recorded on a standard strip chart recorder and the pen trace analyzed by the hand, eye, and skill of the analyst. The process begins with the analyst finding out what samples need to be processed, finding those samples, and preparing them for injection into the instrument. The instrument has to be set up for the analysis, which includes installing the proper columns, adjusting flow rates, confirming component temperatures, and making sure that the instrument is working properly.

As each injection is made, its starting point is marked on the strip chart, which records the data as a pen trace of the analog signal. This process is repeated for each sample and reference standard. Depending on the type of analysis, each sample’s data may take up to several feet of chart paper. The recording is a continuous trace and a faithful representation of the detector output, without any filtering aside from electrical or mechanical noise reduction and attenuator adjustments. The attenuator selects the range that keeps the recorded signal within the limits of the paper; a peak too large for the selected range pegs the pen at the top of the chart, and that data is lost.

When all the injections have been completed, the analyst begins the evaluation of each sample’s data. That includes:

- inspecting the chromatogram for anomalies, including peaks that weren’t expected (possible contaminants), separations that aren’t as clear as they should be, noise, baseline drifts, and any other unusual conditions that would indicate a problem with that sample or the entire run of samples;

- taking the measurements needed for qualitative and/or quantitative analysis;

- developing the calibration curves; and

- making the calculations needed to complete the analysis.
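The calibration and calculation steps in the list above are the same arithmetic that later stages hand over to a data system. As a minimal sketch, assuming a linear detector response and an external-standard calibration (the text specifies neither), the curve can be fit by ordinary least squares and inverted to quantitate an unknown. The function names, units, and example values are hypothetical.

```python
def fit_calibration(concentrations, areas):
    """Fit area = slope * concentration + intercept by ordinary least squares.

    Returns (slope, intercept, r_squared). Assumes a linear response.
    """
    n = len(concentrations)
    if n < 2 or n != len(areas):
        raise ValueError("need at least two paired standards")
    mean_x = sum(concentrations) / n
    mean_y = sum(areas) / n
    sxx = sum((x - mean_x) ** 2 for x in concentrations)
    syy = sum((y - mean_y) ** 2 for y in areas)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(concentrations, areas))
    if sxx == 0 or syy == 0:
        raise ValueError("degenerate calibration data")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)  # goodness of fit for the analyst to judge
    return slope, intercept, r_squared


def quantitate(area, slope, intercept):
    """Invert the calibration line to estimate a concentration from a peak area."""
    return (area - intercept) / slope


# Hypothetical standards (concentration in mg/L) and their measured peak areas.
std_conc = [1.0, 2.0, 5.0, 10.0]
std_area = [1520.0, 3020.0, 7520.0, 15020.0]

slope, intercept, r2 = fit_calibration(std_conc, std_area)
unknown = quantitate(6020.0, slope, intercept)
print(f"slope={slope:.1f}, intercept={intercept:.1f}, r^2={r2:.4f}, unknown={unknown:.2f} mg/L")
# prints: slope=1500.0, intercept=20.0, r^2=1.0000, unknown=4.00 mg/L
```

In the manual stage, the analyst does this by hand from peak measurements taken off the chart; real methods may call for weighted fits, internal standards, or nonlinear curves.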

The analysis would also cover any in-process control samples and address issues with problem samples. The final step would be the administrative work, including checks of the work by another analyst, reporting results, and updating work request lists.

Stage 2: Point automation, applied to specific tasks

The next major development in this evolution is the introduction of automated injectors. Instead of the analyst spending the day injecting samples into the instrument's injection port, a piece of equipment does it, ushering in the first expansion of the analyst’s skill set. (In the previous stage, the analysis time is generally too short to allow the analyst to do anything else, so the analyst's day is spent injecting and waiting.) Granted, this isn't a major change, but it is a change. It requires the analyst to confirm that the samples and standards are in the right order, and that either the right number of injections per sample is set or duplicate vials are put in the tray (duplicate injections are used to confirm that problems don't occur during the injection process). The analyst also has to ensure that the auto-injector is connected to the strip chart recorder so that the injection timing mark is made automatically.
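The checks described above (run order, injections per sample, duplicates) amount to verifying an injection sequence. The sketch below builds such a sequence under a hypothetical bracketing scheme in which standards run at the start, between blocks of samples, and at the end; neither the scheme nor the names come from the text, and real auto-injectors define sequences in their own software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Injection:
    vial_id: str
    kind: str       # "standard" or "sample"
    replicate: int  # 1-based injection number for this vial


def build_sequence(standards, samples, injections_per_vial=2, bracket_every=5):
    """Return the injections in run order.

    Hypothetical bracketing scheme: the standards run at the start, after
    every `bracket_every` samples, and at the end, so each block of samples
    sits between two sets of standards. Each vial is injected
    `injections_per_vial` times in a row (duplicate injections).
    """
    if injections_per_vial < 1 or bracket_every < 1:
        raise ValueError("injections_per_vial and bracket_every must be >= 1")
    vials = [(v, "standard") for v in standards]
    for i, sample in enumerate(samples, start=1):
        vials.append((sample, "sample"))
        # Re-run the standards between blocks; the last block is closed below.
        if i % bracket_every == 0 and i < len(samples):
            vials.extend((v, "standard") for v in standards)
    vials.extend((v, "standard") for v in standards)
    return [
        Injection(vial_id, kind, rep)
        for vial_id, kind in vials
        for rep in range(1, injections_per_vial + 1)
    ]


for inj in build_sequence(["STD-1", "STD-2"], ["S-101", "S-102", "S-103"], bracket_every=2):
    print(inj.vial_id, inj.kind, inj.replicate)
```

Printing the list gives the analyst something concrete to check against the physical tray before the run starts.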

This simple change of adding an auto-injector to the process has some impact on the analyst's skill set. The same holds true for the use of automatic integrators and sample preparation systems: in addition to understanding the science, the lab work takes on the added dimension of managing systems, trading manual labor for systems supervision and gaining higher productivity.

Stage 3: Sequential-step automation

The addition of data systems to the sample analysis process train (from worklist generation to sample preparation to instrument analysis sequence) further reduces the amount of work the analyst does in sample analysis and changes the nature of the work performed. Whether it is a simple integrator or an advanced computer system, the data system works with the auto-injector to start the instrument analysis phase of work, acquire the signal from the detector, convert it to digital form, process that data (e.g., peak detection, peak area and height calculations, retention times), and perform the calculations needed for quantitative analysis. The result is less work for the analyst and higher productivity.
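To make the data-processing steps concrete, here is a minimal sketch of threshold-based peak detection on a digitized detector signal, reporting retention time, height, and area. It is not how any particular commercial data system works: the flat baseline is a simplifying assumption, and `threshold` and `min_points` are hypothetical stand-ins for the kind of tunable parameters that decide what counts as a peak and what is rejected as noise.

```python
from dataclasses import dataclass


@dataclass
class Peak:
    retention_time: float  # time of the apex, same units as `times`
    height: float          # apex signal above the baseline
    area: float            # trapezoidal area above the baseline


def detect_peaks(times, signal, threshold, min_points=3, baseline=0.0):
    """Find peaks in a digitized detector signal.

    A peak is a run of consecutive points whose baseline-corrected value
    exceeds `threshold`. Runs shorter than `min_points` are rejected as
    noise spikes. A flat `baseline` is a simplifying assumption.
    """
    corrected = [s - baseline for s in signal]
    peaks = []
    start = None
    # Iterate one index past the end so a run reaching the end is closed.
    for i in range(len(corrected) + 1):
        above = i < len(corrected) and corrected[i] > threshold
        if above and start is None:
            start = i
        elif not above and start is not None:
            if i - start >= min_points:
                apex = max(range(start, i), key=lambda j: corrected[j])
                # Integrate only over the points above threshold; a real data
                # system integrates between drawn peak start and end points.
                area = sum(
                    (times[j + 1] - times[j]) * (corrected[j] + corrected[j + 1]) / 2
                    for j in range(start, i - 1)
                )
                peaks.append(Peak(times[apex], corrected[apex], area))
            start = None
    return peaks


times = [round(0.1 * k, 1) for k in range(10)]  # minutes
signal = [0, 0, 1, 5, 9, 5, 1, 0, 3, 0]         # single-point spike at index 8
print(detect_peaks(times, signal, threshold=0.5))                # spike rejected as noise
print(detect_peaks(times, signal, threshold=0.5, min_points=1))  # spike reported as a peak
```

The two calls differ only in one parameter, yet they report different chromatograms, which is why the analyst has to evaluate such settings for each analytical procedure.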

While systems like this are common in labs today, there are problems, which we’ll address shortly.

Stage 4: Production-level automation (scientific manufacturing and production)

Depending on what is necessary for sample preparation, it may not be much of a stretch to have automated sample prep, injection, data collection, analysis, and reporting (with automated updates into a LIMS) performed in a small footprint with equipment available today. One vendor has an auto-injection system that is capable of dissolving material, performing extractions, mixing, and barcode reading, as well as other functions. Connect that to a chromatograph and data station, with a programmed connection to a LIMS, and you have the basis of an automated sample preparation–chromatographic system. However, there are some issues that have to be noted and addressed.

The goal with such a system has to be high-volume, automated sample processing with the generation of high-quality data. The intent is to reduce the amount of work the analyst has to perform, ideally so that the system can run unattended. Note that “high-quality” in this case means having a high level of confidence in the results. There is more to that than the ability to do calculations for quantitative analysis or having a validated system; you have to validate the right system.

Computer systems used in chromatographic analysis can be tuned to control how peaks are detected, what is rejected as noise, and how separations are identified so that baselines can be properly drawn and peak areas allocated. The analyst needs to evaluate the impact of these parameters for each analytical procedure and make sure that the proper settings are used.

As previously noted regarding manual processes, inspecting the chromatogram for elements that don’t match the expectations for a well-characterized sample (the number of peaks that should be there, the type of separation between peaks, etc.) is vital. This screening has to be applied to every sample, whether by human eye or by an automated system, the latter giving lower labor costs and higher productivity. If we are going to build fully automated production systems, we have to be able to describe a screening template that is applied to every sample to either confirm that the sample fits the standard criteria or flag it for further evaluation. That further evaluation may be frustrated if the data system does not keep sufficient data for it, requiring the sample to be rerun.
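A screening template of the kind described above can be expressed as data plus a check applied to every sample. The sketch below is illustrative only: its criteria (exactly one peak per expected component within a retention-time window, and a height limit for unexplained peaks) are assumptions rather than a validated method, the names are hypothetical, and a real template would also cover separation quality, noise, and baseline drift.

```python
from dataclasses import dataclass


@dataclass
class ScreeningTemplate:
    # Expected components: name -> (earliest, latest) retention time in minutes.
    expected: dict[str, tuple[float, float]]
    # Hypothetical criterion: unexplained peaks taller than this are flagged.
    max_unexpected_height: float = 0.0


def screen(peaks, template):
    """Check one sample's peaks, given as (retention_time, height) pairs.

    Returns (passed, reasons); a failing sample is flagged for further
    evaluation rather than silently reported.
    """
    reasons = []
    explained = set()  # indices of peaks accounted for by the template
    for name, (earliest, latest) in template.expected.items():
        hits = [i for i, (rt, _) in enumerate(peaks) if earliest <= rt <= latest]
        if len(hits) != 1:
            reasons.append(
                f"{name}: expected 1 peak in {earliest}-{latest} min, found {len(hits)}"
            )
        explained.update(hits)
    for i, (rt, height) in enumerate(peaks):
        if i not in explained and height > template.max_unexpected_height:
            reasons.append(f"unexpected peak at {rt} min, height {height}")
    return not reasons, reasons


template = ScreeningTemplate(expected={"analyte A": (1.0, 1.5), "internal std": (3.0, 3.5)})
print(screen([(1.2, 100.0), (3.3, 80.0)], template))
# prints: (True, [])
print(screen([(1.2, 100.0), (2.1, 5.0), (3.3, 80.0)], template))
# prints: (False, ['unexpected peak at 2.1 min, height 5.0'])
```

Returning the reasons, not just a pass/fail flag, supports the further evaluation step, which in turn depends on the data system having retained the raw data for flagged samples.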